Retrieving information from schema registry

This scenario explains how to retrieve flight information from a schema registry using tKafkaInputAvro in your Spark Streaming Jobs.

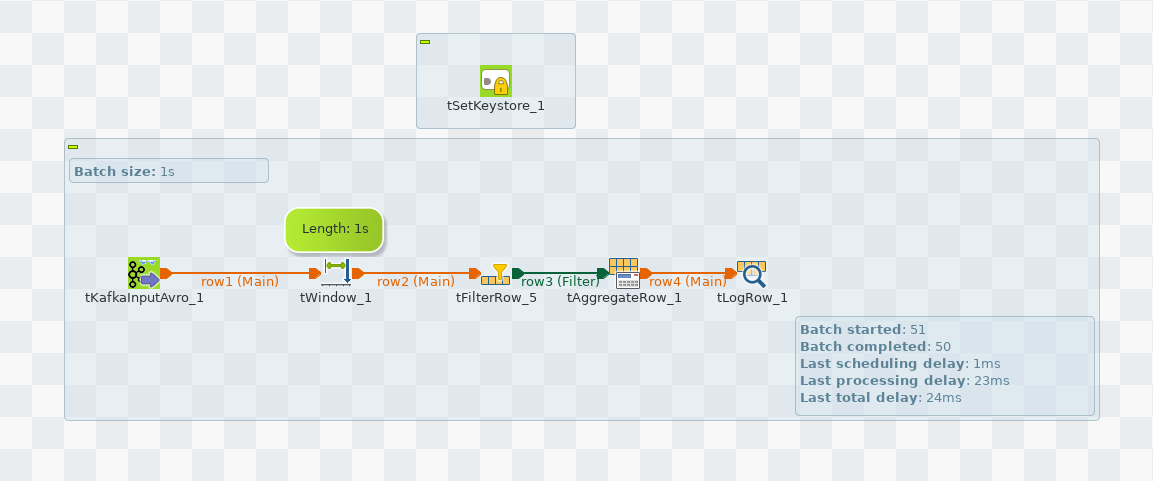

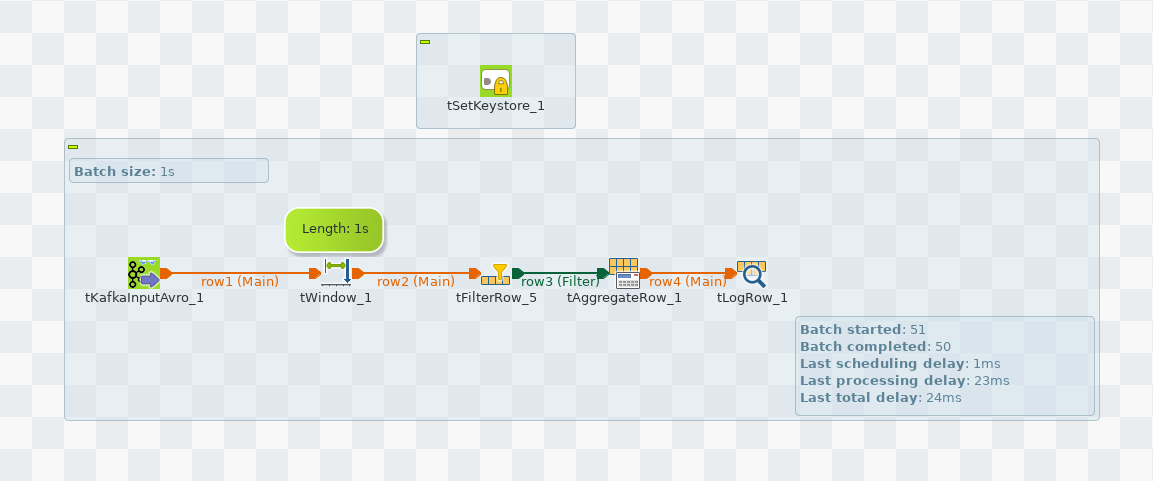

In this scenario, you create the following Spark Streaming Job:

Before replicating this scenario, ensure that your Kafka system is up and running and that you have the rights and permissions required to access the Kafka topic to be used.

This scenario uses the following Avro schema:

    {
      "type": "record",
      "name": "flightRecord",
      "namespace": "flightInformation",
      "fields": [
        {
          "name": "flightNumber",
          "type": "string"
        },
        {
          "name": "departure",
          "type": "string"
        },
        {
          "name": "destination",
          "type": "string"
        },
        {
          "name": "nbPassengers",
          "type": "int"
        },
        {
          "name": "aircraftSize",
          "type": "string"
        }
      ]
    }

This scenario retrieves data from the following Avro messages:

{"flightNumber":"OMP45","departure":"Paris","destination":"Athens","nbPassengers":120,"aircraftSize":"Medium"}

{"flightNumber":"FGH34","departure":"Paris","destination":"Oslo","nbPassengers":122,"aircraftSize":"Medium"}

{"flightNumber":"XHK20","departure":"Madrid","destination":"Buenos Aires","nbPassengers":247,"aircraftSize":"Large"}

{"flightNumber":"TUI09","departure":"Zurich","destination":"Johannesburg","nbPassengers":322,"aircraftSize":"Large"}

{"flightNumber":"CDI03","departure":"Frankfurt","destination":"New-York","nbPassengers":366,"aircraftSize":"Large"}

{"flightNumber":"JKF77","departure":"Paris","destination":"Los-Angeles","nbPassengers":380,"aircraftSize":"Large"}

{"flightNumber":"LBZ23","departure":"London","destination":"Shanghai","nbPassengers":416,"aircraftSize":"Large"}

{"flightNumber":"NSV50","departure":"London","destination":"Vienna","nbPassengers":95,"aircraftSize":"Small"}

{"flightNumber":"LRS12","departure":"Roma","destination":"Rio de Janeiro","nbPassengers":395,"aircraftSize":"Large"}

{"flightNumber":"ALJ67","departure":"Roma","destination":"Warsaw","nbPassengers":102,"aircraftSize":"Small"}

Note that the sample data is created for demonstration purposes only.
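For orientation, the sketch below shows what one of these records might look like on the wire and how it can be decoded. It assumes a Confluent-style schema registry framing (one zero magic byte, a 4-byte big-endian schema ID, then the Avro binary body); the source does not state which registry or framing tKafkaInputAvro expects, and the schema ID `42` and the helper names are hypothetical. The field encoding follows the Avro binary rules for `string` and `int`.

```python
import struct

# Field order and types taken from the flightRecord schema in this scenario.
FIELDS = [
    ("flightNumber", "string"),
    ("departure", "string"),
    ("destination", "string"),
    ("nbPassengers", "int"),
    ("aircraftSize", "string"),
]


def write_long(n: int) -> bytes:
    """Avro int/long: zigzag encoding followed by a variable-length integer."""
    z = (n << 1) ^ (n >> 63)
    out = bytearray()
    while z >= 0x80:
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def read_long(buf: bytes, pos: int):
    """Inverse of write_long; returns (value, next position)."""
    z, shift = 0, 0
    while True:
        b = buf[pos]
        pos += 1
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            break
        shift += 7
    return (z >> 1) ^ -(z & 1), pos


def encode(record: dict, schema_id: int) -> bytes:
    """Frame a record: magic byte 0, 4-byte schema ID, Avro binary body."""
    body = bytearray()
    for name, avro_type in FIELDS:
        if avro_type == "string":
            raw = record[name].encode("utf-8")
            body += write_long(len(raw)) + raw
        else:  # "int"
            body += write_long(record[name])
    return b"\x00" + struct.pack(">I", schema_id) + bytes(body)


def decode(payload: bytes):
    """Return (schema_id, record) from a framed payload."""
    if payload[0] != 0:
        raise ValueError("unexpected magic byte")
    schema_id = struct.unpack(">I", payload[1:5])[0]
    pos, record = 5, {}
    for name, avro_type in FIELDS:
        value, pos = read_long(payload, pos)
        if avro_type == "string":
            # For strings, the decoded long is the UTF-8 byte length.
            record[name] = payload[pos:pos + value].decode("utf-8")
            pos += value
        else:
            record[name] = value
    return schema_id, record


flight = {"flightNumber": "OMP45", "departure": "Paris",
          "destination": "Athens", "nbPassengers": 120,
          "aircraftSize": "Medium"}
payload = encode(flight, schema_id=42)  # 42 is a hypothetical schema ID
print(decode(payload))
```

In a real Job, the consumer uses the schema ID to fetch the schema from the registry instead of hard-coding the field list as done here.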

This scenario applies only to Talend Real-Time Big Data Platform and Talend Data Fabric.